The future of web content: Where AI, user preferences, and SEO meet

- Mordy Oberstein

- Jan 4, 2024

- 20 min read

Updated: Jul 31

Author: Mordy Oberstein

There is a revolution occurring: The relatively new widespread availability of AI content has sparked renewed conversations around content quality, and it’s not an overstatement to say that it is reshaping everything we know about web content and SEO.

The mere notion of AI-written content (let alone the various “scandals” surrounding large brands that have implemented it) has led more users to scrutinize the quality of the content search engines are sending them to. This conversation (ironically one that is very often without words) is redefining what our audiences expect from the content we’re creating. It’s one of the least discussed aspects of the “AI wars” and it comes at a time when content consumption trends are already on the move.

So, let’s have this conversation and take a hard look at how web content is changing and how the greatest source of web traffic on earth, Google, has already been adjusting and will continue to adjust.

Buckle up.

The accelerated future of web content: How we got here

For years, SEOs have been talking about the need to double down on web content. I trace the height of this conversation all the way back to Google’s Medic Update (AKA the August 2018 Core Update). This update was the first time the “modern-day” conversation around Your Money or Your Life (YMYL) sites really came to the forefront (in the context of what was then Expertise, Authoritativeness, and Trustworthiness [E-A-T, now E-E-A-T]).

To me, this is because there was a marked shift in what Google was able to do in terms of distinguishing quality content from the chaff of the web. It was a qualitative leap forward for Google’s ability to parse content quality.

Was it perfect? Not at all. But, it was the beginning of a very slow burn towards raising the standard on what could and could not rank well on Google’s SERP.

Expectations outpaced Google’s ability to identify quality content

In fact, it was such a slow burn that public perception began to outpace Google’s technological abilities. I would say circa 2021 publications started to release articles calling out the quality of Google’s results.

I have meticulously tracked the outcomes of Google’s algorithm updates for the better part of 10 years. The notion that the results were getting worse is almost nonsensical (this is a different question from “Are the results good enough?”). Personally, I (as well as others such as Lily Ray and Glenn Gabe) have seen Google do some amazing things with regard to its understanding of content quality.

Improving the technology behind the algorithm, as I mentioned, is a slow burn. What I think happened, and it’s something former Googler (and also former CEO of Yahoo!) Marissa Mayer alluded to, is that our expectations outpaced what Google was able to do from a technological standpoint. As the number of allegations against big tech companies increased (often resulting in them appearing before Congress), so too did the skepticism around the content we were consuming on the web.

In other words, the content incentive cycle at this point had hinged almost entirely on the search engine’s quality thresholds. If Google was able to parse out quality content to the Nth degree, then content creators and SEOs produced content to match that degree of quality. If Google could parse out quality to the Xth degree, then we shifted and slowly started to create content that met the new quality threshold. But, very rarely did “we” think about the ultimate needs of the user and their demands. The algorithm became the North Star.

This inertia was disrupted by an outside voice—namely people becoming far more skeptical about the content they were consuming as more and more CEOs showed up to explain themselves in front of Congress.

Generative AI adds fuel to the fire

It’s safe to say that generative AI has exacerbated this issue. The ease with which volumes of content can be generated (and the questionable accuracy of that content) has taken people’s skepticism and injected it into their veins like Barry Bonds juicing up to hit another home run (for the record, it has not been proven that Barry Bonds took steroids).

What AI did to the great content conversation amounts to throwing a propane tank onto what was already a house on fire (i.e., web content).

The net result is the emergence of new content trends—the most notable of which has been this consensus that audiences want content from actual people, not brands or corporations.

There are all sorts of studies and surveys showing that the “young folks” prefer TikTok to more “traditional” mediums, such as search. Even SEOs have gotten on this bandwagon and have begun talking about TikTok as a source of content ideation.

“‘BookTok,’ ‘cottagecore,’ ‘hair theory’—the list goes on. None of these terms existed before TikTok emerged, but they now have a huge impact on how people search across platforms.” — Abby Gleason, SEO Product Manager

This trend obviously didn’t arise in a vacuum. Nor is it all about “AI”—again there was a serious buildup to this moment that, in my opinion, has been brewing for years (the very “formative” years of the web’s younger users).

What I am saying is that the shift in content consumption trends is very real and it will continue to get increasingly “real,” real fast as we’re not anywhere close to the end of the “AI content conversation” (again, it’s less a conversation and more Thanksgiving dinner with your dysfunctional family).

Now that we know where we are and how we got here (a very underestimated facet of dealing with the web and its future), what does that mean for content generation?

The future of content creation: How to align

I want to offer some concrete content strategies that I think will become more prominent going forward. I feel that a lot of the dialogue I see out there around “content by people, for people” is oversimplified (which is usually the trend with these sorts of things).

Instead of identifying the demand and abstracting out from there to develop concrete tactics, we (as digital marketers) tend to get very “linear” with things. We focus more on a tactic than on the underlying principle we can then apply in a variety of ways.

In our case, there’s been a heap of talk about “influencer content” as again, people want content from people, not corporations. To me, that is akin to not being able to see the forest for the trees. Aside from the superficiality, the content format simply doesn’t apply to half the content out there. What are you going to do? Ask Kim Kardashian to record a video for every landing page on your site?

Let’s instead focus on the underlying concept: People don’t want to be spoken at; they are looking to engage on a more personal level, as this fosters a sense of trust, security, and overall quality.

It’s entirely possible to meet this need without leveraging some form of influencer marketing. Below are two ways that come to mind.

Situational writing: The value for users and SEO

I want to challenge the idea of “creating a personal connection” between the content and its audience.

Yes, the most obvious way to make that connection is with content from actual people speaking directly to your audience (much the way a TikTok video could). However, if the North Star conceptually is not a media format, but instead simply creating content that directly and substantially engages with the audience, then the possibilities are, in a way, endless.

In other words, you need content that makes a connection. One very powerful, yet hardly discussed way to show you are thinking about the reader and their pain points is to predict their needs. Predicting the needs of your readers (and being quite explicit about it within your content) is, however subtly, having a conversation with your audience.

A fabulous way to do this is with what I call “situational content.” It’s not very complicated but I think it’s very powerful.

Imagine I’m writing an article about how to get your kids to bed (a pain point for me, no doubt) and in this article, I offer five ways to get your kids to bed quickly. They might be good tips but I’m not conversing with you. I’m speaking at you or (at best) to you.

Now imagine I write the same post but for each tip, I run through the scenario that the tip doesn’t work under and then offer you a follow-up recommendation. Lo and behold, we are having an implicit conversation. I offer the tip. I assume you say it didn’t work and then reply back to you with another follow-up tip. There’s an implied conversation going on. A latent back and forth.

Situational writing assumes an implicit reaction on the reader’s part and latently incorporates that dialogue into the content itself. This way, I’m communicating with you by assuming your response. The net result is conversational content.

From an SEO perspective, it’s not fundamentally possible to create situational content without either having first-hand knowledge and experience related to the topic or a high level of expertise. Thus, situational content is rooted in strong E-E-A-T and (all other things being equal) would align with the signals Google uses to algorithmically approximate strong E-E-A-T.

The case for taking a conversational tone with your content

What about your typical landing page? How do you connect to your audience and have a conversation with them via content like your run-of-the-mill landing page?

Again, you’re not going to embed some influencer’s video short onto all your landing pages. I mean you could, but folks are going to see right through that. This is especially true as time goes on and readers become inundated with influencers here, there, and everywhere.

To me, this really highlights the fundamental problem in a lot of the advice around “the future of content.” Yes, people want to hear from actual people and not brands, so (the advice goes) as a brand you can just stick influencers into everything you do. But most of the pages on the web probably don’t align with that very generic advice.

Now, you can do things like feature customer reviews and social proof on a page to build trust. But trust isn’t necessarily conversation. If the goal is conversation, then this won’t work.

What to do, what to do?

The first thing I think to do is to accept the reality that you cannot have a substantial level of conversation with every asset or every web page you create. That’s just not how the internet works.

Sometimes, the best thing you can do is to be as conversational as possible. And I’ll tell you the hard truth: We all want to have whatever influencer pitch our product for us, but we don’t want to take our foot off the acquisition gas pedal in any way, shape, or form. However, this is precisely what I’m advocating for.

If our audiences want to receive more conversational content, then we as content creators and contributors should offer them a more conversational tone.

Content is going to become increasingly conversational. You know those old used car commercials you see in movies from the 1970s? That’s what a lot of today’s content (particularly acquisitional content) is going to sound like a few years from now. It will sound like an overly overt sales pitch because it is fundamentally an overly overt sales pitch.

Marketing catchphrases, buzzwords, and subliminal overselling are going to stand out, but not in a good way. It will feel (if it doesn’t already) like the content is talking at you and not even to you—let alone with you.

Again, part of the demand for conversational content is the skepticism behind our current state of content. Pages that don’t align are going to be treated with mass skepticism by the target audience. As counterintuitive as it may sound, take your foot off the acquisitional gas pedal and give your audience some breathing room by taking a more explanatory (and therefore conversational) tone with your content that aims to sell.

Sometimes you can have an actual conversation with your audience and sometimes the best you can do is create a situation where the audience is willing to hear you. As time goes on, taking a more conversational tone with your acquisitional content will enable your audience to let their guard down so that they can assimilate what you are trying to convey.

The future of content on the Google SERP

So, how does web content rapidly changing (for the reasons I outlined above) play out on the SERP? I predict that:

- Google will meet the demand for experience-based content

- Language profiles and parsing are the future of search—not SGE

Google will meet the demand for experience-based content

It’s not a matter of “if,” it’s just a matter of “how” and “when” (and really how effectively). It’s really not a complicated equation: If Google doesn’t meet user demands, users will go somewhere else.

You can argue that Google has already begun exploring these waters. From adding an additional “E” for “experience” to E-E-A-T to the role of first-hand experience in the Product Review updates, Google now leans heavily into a focus on first-person knowledge.

This trend is all the more obvious when you look at some of Google’s more recent tests and announcements. Just to mention a few:

Google began testing “Notes” in 2023. Regardless of how effective you think the feature may be (I’m a little skeptical myself) it’s a clear sign that Google wants real voices on the SERP.

In mid-November of 2023, Google talked about notifications for topics you are following. What does this have to do with a more “personal” take on the SERP? I speculate that the sort of content found on forums and microblogs would be a perfect fit.

Speaking of forums, Google said they are looking to make algorithmic changes to better “surface hidden gems.” This is basically Google looking to reward actual human perspectives and experiences from social media and from forums (and the like) on the SERP.

Continuing with forums, Google also announced it would support structured data markup that would result in rich results for content pulled from “social media platforms, forums, and other communities” meant to propagate first-person knowledge and perspectives.
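To make the structured data point a little more concrete, here is a minimal sketch of what forum markup could look like. It builds a schema.org `DiscussionForumPosting` object in Python and prints it as JSON-LD. The helper name `forum_post_jsonld`, the sample post, the author name, and the example.com URL are all hypothetical placeholders, and the set of properties shown is an assumption about a reasonable minimum rather than a complete list of what Google expects.

```python
import json


def forum_post_jsonld(headline, text, author_name, date_published, url):
    """Build a schema.org DiscussionForumPosting object as a plain dict."""
    return {
        "@context": "https://schema.org",
        "@type": "DiscussionForumPosting",
        "headline": headline,
        "text": text,
        "url": url,
        "datePublished": date_published,
        "author": {"@type": "Person", "name": author_name},
    }


# Hypothetical forum post, used purely for illustration.
post = forum_post_jsonld(
    headline="Which vacuum actually handles cat hair?",
    text="Great on carpet but I had a difficult time sucking up cat hair.",
    author_name="Example Forum Member",
    date_published="2024-01-04T09:00:00+00:00",
    url="https://example.com/forum/threads/vacuum-cat-hair",
)

# JSON-LD is normally embedded in the page inside a script tag.
markup = json.dumps(post, indent=2)
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Treat this as a starting point only: check the markup against Google's current documentation and a rich results test before relying on it.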

Google’s ability to do all of this effectively is a legitimate question. As I said above, for me it’s just a matter of “when” Google will get it right—not “if.” It has no choice but to get it right. The demand for first-person content is only going to increase. Google understands how the AI conversation is a driving force behind this and is looking to balance out the SERP accordingly. If it doesn’t get this right, users (particularly younger users) will flock to platforms like TikTok.

The future of forums and the SERP

Yes, the above is an H4—it’s that kind of post. I want to address “forums.” For SEOs, the second we hear the word “forum,” all we think about is spam and ranking manipulation.

I want to challenge our a priori notions here just a tad.

We associate “forums” and “SEO” with all sorts of spammy and less-than-legitimate practices. I would be very cautious of thinking about forums based on our experiences of the past so as not to throw the baby out with the bath water.

I don’t believe I am being controversial by saying “social media is not what it used to be.” Online communities have been seeking refuge in a host of platforms like Slack, WhatsApp groups, etc. as social media (to an extent) has lost a bit of its perceived luster.

What happens as Google starts to rank content from communities more regularly and (as Wix’s own Kobi Gamliel pointed out) when online community organizations realize they can use their own ecosystem and not someone else’s?

The incentive cycle on the web over the past 20 years has revolved around what Google shows on the SERP. If Google presents more forums, it will spark people to start forums.

This doesn’t mean that what a forum was 20 years ago will be what a forum is today vis-a-vis the SERP. Creating genuine E-E-A-T with a forum, or a microblog, or whatever else is going to come into focus and become more of a part of a website’s organic strategy.

Language profiles and parsing are the future of search—not SGE

There, I said it.

The future of the SERP is not SGE (Search Generative Experience). I am not saying that SGE won’t be a part of the future of the SERP, but I see it more as a facilitator than anything (I’ll elaborate on that in just a bit).

The future of the SERP at any point is dictated by two factors:

- The desires and behaviors of users

- The technological ability to meet those desires

We’ve already talked a lot about user desires and demands. Now, we need to talk about Google’s ability to meet them—SGE is not that.

SGE, if I can be a bit brash, was a response to Bing’s AI chat beating Google to the punch. A punch that did not have much “oomph” to it, at least not for Bing as it did not increase the company’s market share. Don’t think for a minute Google did not take notice of that little fact.

Let’s go back to the root of users’ demands. Because of various developments, including the proliferation of “AI this” and “AI that,” users are far more skeptical about the content they see on the web. They also want to be in greater contact with personal experience via their content consumption, which aligns with the need for trust in an ocean of skepticism. Think of it like the B side of a record (and… I just lost my audience under the age of 30).

Which one of those problems does SGE solve, exactly? Neither.

Let’s discuss two actual solutions.

How Google fundamentally rewards experience on the SERP

Let’s start with experience on the SERP. Aside from pulling in more content from social media or forums, etc., how can Google adequately reward “experience” within the core search results?

We don’t really need to look into a crystal ball for this: Google has already been rewarding experience with the Reviews update (where it calls for first-hand experience) and it is my belief the search engine has been doing the same within the core updates, the Helpful Content Updates (HCU), and so on.

The question is: How?

It’s not very far-fetched when you get into it. Machine learning is essentially built to profile language and language structure. I always go back to a statement John Mueller made back in 2019:

“It is something where, if you have an overview of the whole web or kind of a large part of the web, and you see which type of content is reasonable for individual topics then that is something where you could potentially infer from that.” — John Mueller, Senior Search Analyst at Google

Countless tests, experiments, and breakthroughs in using machine learning to dissect language patterns have been published over the past few years. It’s what machine learning was built to do.

In terms of ranking experience-based content, how does this play out on the SERP?

Let’s use a product review example in line with the Reviews update. For argument's sake, assume we’re reviewing a vacuum cleaner.

By default, I might lean on some relatively generic language, such as “It was a great vacuum cleaner but didn’t do well on carpet.”

Conversely, if I am relatively good at communicating my experience to others (in this case, about using the vacuum), I would be more apt to use language such as “Great on carpet but I had a difficult time sucking up cat hair.”

The language structures here are worlds apart. The level of modification and even the first-person language easily sets the latter expression apart from the “generic” content I created. It is not far-fetched to imagine that Google can implement machine learning to better score the likelihood that the content’s language structure indicates firsthand experience.
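To illustrate the idea of scoring language structure, here is a deliberately naive sketch that measures how much first-person language a sentence contains, using the two vacuum sentences above. Everything in it (the `FIRST_PERSON` word list, the `first_person_ratio` function, and the idea of using a simple ratio) is my own assumption for illustration. It is not how Google does it; a real system would presumably rely on trained models and far richer signals.

```python
import re

# Hypothetical, hand-picked list of first-person markers.
FIRST_PERSON = {"i", "i'd", "i've", "i'm", "me", "my", "mine", "we", "our", "us"}


def tokenize(text):
    """Lowercase the text, normalize curly apostrophes, and split into words."""
    normalized = text.lower().replace("\u2019", "'")
    return re.findall(r"[a-z']+", normalized)


def first_person_ratio(text):
    """Return the share of tokens that are first-person markers (0.0 to 1.0)."""
    tokens = tokenize(text)
    if not tokens:
        return 0.0
    hits = sum(1 for token in tokens if token in FIRST_PERSON)
    return hits / len(tokens)


generic = "It was a great vacuum cleaner but didn't do well on carpet."
experienced = "Great on carpet but I had a difficult time sucking up cat hair."

for label, sentence in (("generic", generic), ("experienced", experienced)):
    print(f"{label}: {first_person_ratio(sentence):.3f}")
```

A single pronoun count is obviously easy to game, which is exactly why the more realistic version of this idea involves profiling many language features at once rather than one.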

Language structures and profiles are (and will be) an increasingly important component of what Google does to decipher overall quality and include firsthand experience within the content it shows on the SERP.

This is perhaps best expressed by Pandu Nayak, Google’s VP of Search, who (at Google’s antitrust trial) was quoted as saying:

“DeepRank not only gives significant relevance gains, but also ties ranking more tightly to the broader field of language understanding.” — Pandu Nayak, VP of Search at Google

For SEOs, it all brings what our mothers have been telling us for years to the forefront: watch your language.

I think this is true across the board for all emerging content trends Google looks to align with. The only real tools it has at its disposal are profiles—both language profiles and user behavior profiles. The combination of the two can be very powerful.

To highlight this, I’ll offer you an overly-simplistic example: Let’s assume that the trend for more creator voices within content continues to the point where content creators are utilizing more first-person language. Now let’s assume some of this content ranks.

Accordingly, we have content that does include the creator’s voice and the content of yesteryear that generally does not.

Now, assume that when users click on a page and see the typically stoic content, they bounce back to the SERP in favor of content that does include the creator’s voice (read: first-hand experience). Machine learning systems, like RankBrain and DeepRank, could (in theory) identify this type of first-hand language and create a new profile for that subset of topics. Namely, a profile that indicates “creator voice” must be part of the language structure.

This is, in a sense, what has been happening on the SERP since about 2015. We’ve only seen this process expand its reach and impact, and we are only going to see what I think are huge advancements here.

How Google can handle skepticism around content

There’s a lot more to addressing user skepticism (and downright cynicism) than just firsthand knowledge and experience.

Fundamentally, it boils down to offering a quality content experience to the user (again, firsthand knowledge being a part of that when necessary). The question is, how do we parse “a quality experience” in the context of increased skepticism around web content?

We parse it with parsing.

Users need to know that Google is offering them results—not just presenting a spattering of content for them to wade through. Users then need to feel that the content itself speaks to them and their specific needs.

To put it more concretely, if I feel like I am presented with content that has my specific needs in mind or if I am presented with an ecosystem (such as the Google SERP) that has my specific needs in mind, then I am more inclined to trust what I am consuming.

To that end, specificity is key. The need for specificity speaks to my point about SGE being somewhat of a distraction in a way (and in other ways, not, which I’ll elaborate on shortly).

In 2021, Google introduced us to MUM (Multitask Unified Model). A big focus around the “MUM conversation” has been its ability to unify content mediums. For example, MUM enables you to take a picture with Google Lens and add a text input as well (so you can, for example, take a picture of a pattern you like and ask Google to find socks with that matching pattern).

When Google demoed MUM for the first time, it also showed us how MUM could theoretically handle a search query. The example query Google used was “I’ve hiked Mt. Adams, and now want to hike Mt. Fuji next fall, what should I do differently to prepare?”

Of course, SEOs were awed by MUM’s ability to parse the various aspects of this complex query. What struck me, however, was how MUM dealt with the very simple phrase “to prepare.” To quote Google, MUM “could also understand that, in the context of hiking, to ‘prepare’ could include things like fitness training as well as finding the right gear.”

Topical parsing is how you bring specificity to the SERP. It takes a mundane query like “go to yankees game” and morphs the results from a bunch of links to buy tickets to content around transportation to the game, what the game experience is like and what to expect, what costs are involved with going to a game, etc.
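One way to picture topical parsing is as a mapping from a single broad query to the facets a searcher might actually care about. The sketch below hard-codes that mapping for the Yankees example. The `TopicFacet` class, the `FACETS` table, the `parse_topic` function, and the example follow-up queries are all hypothetical; a search engine would infer facets with machine learning rather than look them up in a hand-written dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class TopicFacet:
    """One aspect of a broader topic, with illustrative follow-up queries."""
    name: str
    example_queries: list[str] = field(default_factory=list)


# Hypothetical, hand-written facet map for a single query.
FACETS = {
    "go to yankees game": [
        TopicFacet("tickets", ["buy yankees tickets"]),
        TopicFacet("transportation", ["how to get to yankee stadium"]),
        TopicFacet("game experience", ["what to expect at a yankees game"]),
        TopicFacet("costs", ["how much does a yankees game cost"]),
    ],
}


def parse_topic(query):
    """Return the known facets for a query, or an empty list if none exist."""
    return FACETS.get(query.strip().lower(), [])


for facet in parse_topic("Go to Yankees game"):
    print(f"{facet.name}: {', '.join(facet.example_queries)}")
```

The same exercise is useful on the content side: listing the facets behind a query by hand is a quick way to spot which of them your page does not address.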

In order to deliver a targeted experience to users, you have to be able to parse the topic. And while MUM is not terribly integrated into the algorithm in this regard (as of yet), it and other machine learning technologies offer Google a tremendous amount of potential.

Elements like MUM, to me, should be the focus of the SERP and the focus for SEOs and content creators, not an SGE box.

Content portals: How SGE can help

Based on the need for stronger topical parsing, the future of the SERP, to me, lies in content portals (i.e., the ability of the SERP to facilitate the deep exploration of the various aspects subsumed under a given topic).

A portal-like experience would enable me to go down the rabbit hole and come back out just so I can go down a new one. Continuing with Google’s “hiking” example, I could explore the training regimens I would need to “prepare” with a wide variety of media and resources, and then explore “preparing” vis-a-vis equipment needed in the same way, without friction.

This is where SGE can effectively come into play. SGE as the end product doesn’t entice me. I don’t think it fundamentally aligns with the core needs of the users with regard to where content (as a commodity) is heading. What it does provide is a nice starting point accented by more entry points.

SGE, as a facilitator, is extremely interesting to me. What I think SGE can do very well is present the user with a quick, contextual look at the topic and then facilitate the portal-like exploration I’m staunchly advocating for.

If I search for [I’ve hiked Mt. Adams, and now want to hike Mt. Fuji next fall, what should I do differently to prepare?], SGE could provide some basic content about the various types of hiking each mountain requires, etc., and then present cards that would enable me to explore various facets, such as the numerous ways to prepare for the hike.

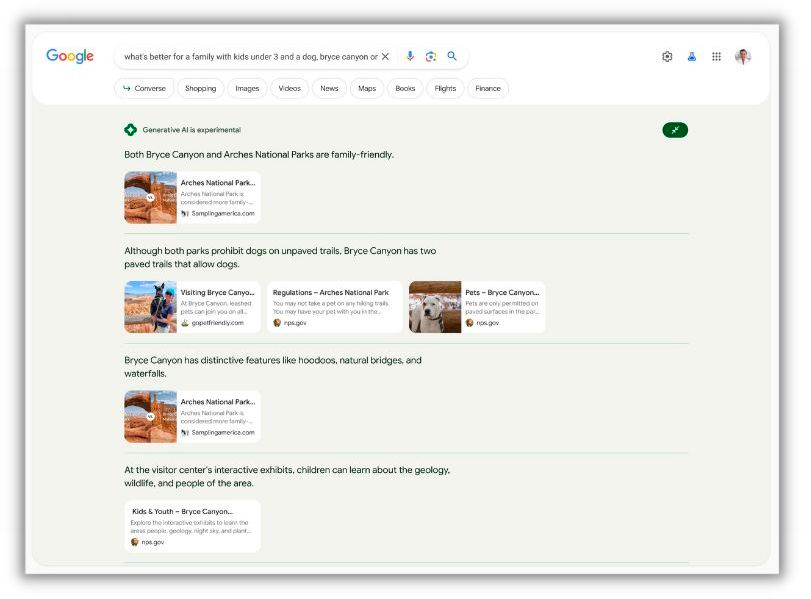

In fact, when you look at SGE’s initial phase, we can see the early signs of this. Initially, SGE had a secondary section that broke down the initial summary into its smaller parts, thereby parsing the overall topic with accentual link-cards.

As SGE has evolved, you essentially have the same result via the link attribution format that allows for revealing URL cards within specific sections of the generated copy.

This acts like a very lightweight entry point to the various subsets of topics covered within SGE’s synopsis. While this is a long way away from being an actual portal that allows for genuine exploration, it has potential. More so, it points to what I think is the proper way to leverage SGE on the SERP.

Where I think you really see this concept shine through is in Google Gemini. In a demo, Google shows that it:

A) Creates custom layouts tailored to best fit the results so that they align with the query’s intent

B) Allows for users to ask follow-up questions related to a specific piece of information provided within the initial response

Both of these characteristics align with the notion I am proposing. A result layout customized to fit the intent of the user is entirely about putting topical exploration at the forefront of the overall experience. Being able to follow up on information (parenthetically, this is another positive of SGE in general) is again, facilitating a tailored exploration experience.

The net result is more user engagement as well as less content apprehension (read: skepticism). There’s no doubt in my mind that Gemini will be an ever-increasing factor on the SERP and it has real potential to engage with users and web content and not to simply “replace” it.

The bottom line is: a generative AI experience has a place on the SERP and (should it facilitate parsing topics and user exploration) it has the potential to tackle some of the more substantial latent user concerns.

The human touch and its role in content

Existential and psychological factors play a far greater role in content consumption than we typically give them credit for. Imagine I wrote a piece of content and by some great cosmic coincidence, AI wrote the same exact piece of content. Now imagine I told you that you had to choose which one to read. Would you choose the piece written by AI or the one I wrote?

I’ve asked this question to a dozen people over the past few weeks and, every time, the answer is that they would read my version of the content. I don’t think these people were trying to placate me. I think they were alluding to a fundamental reality: we want to feel a human connection. So, yes, if you read the AI version of the content in this wild scenario, you would not miss anything in terms of content, but you would not feel any connection to the source of the information.

There is an underlying and quite legitimate need to touch the source of the information we consume. It is fundamentally why we care to know if something was or wasn’t written by AI. We don’t care so much because of the quality but because of the connectivity. Does the content have a source that I can see? That I can somehow connect with even in a very subtle way?

This very basic but powerful human need is at the core of the content trends I’ve discussed here. It’s also why there is (in my mind) no chance that AI-written content will pervasively replace human-generated content. In a way, the improper use of AI to write content undermines this need.

The future of web content isn’t some new direction or technology. It’s quite old, if not as old as time itself. The future of web content, now more than ever, is in its ability to bring parties into contact and connection with each other.