Hello,

I'm using a function to get around the query limit of 100 / 1000 items. I use its result to build a series of fetch requests. It works in the backend, where it is run by a CRON expression configured in jobs.config.

    export async function retrieveVariantItems() {
        let results = await wixData.query("Stores/Variants").limit(100).find();
        let allItems = results.items;
        while (results.hasNext()) {
            results = await results.next();
            allItems = allItems.concat(results.items);
        }
        return allItems;
    }

Then it's integrated into the function that does the fetching:

    export async function refreshShop() {
        await retrieveVariantItems().then((results) => {
            console.log(results)
            let variants = results.map(a => ({
                productId: a.productId,
                variant: a.variantName,
                variantId: a._id,
                productName: a.productName
            }))
            console.log(variants)
            variants.forEach((element) => {
                fetch("https://MYAPI/" + element.productName, /.../

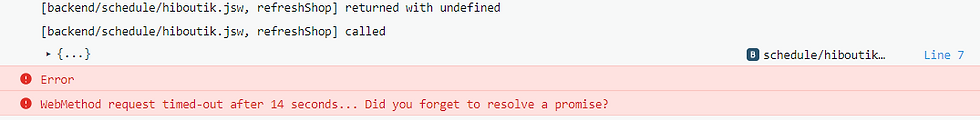

/.../

When I run it as a test in the Wix editor, I get this error.

Of course, if I replace my function with:

    wixData.query("Stores/Variants").limit(100).find();

it does work, but then I can't go through every item...

In addition, I have about 5000 variants! I hope it has nothing to do with the number of items!

OK, I tried something else with setInterval! I think it works, because I do get more queries back (if there's a limit, I don't see it!)

    import { fetch } from 'wix-fetch';
    import wixStoresBackend from 'wix-stores-backend';
    import wixData from 'wix-data';

    export async function refreshShop() {
        const pageSize = 100
        wixData.query("Stores/Variants").limit(pageSize).find().then((firstPage) => {
            let queryResults = firstPage
            let loading = false
            console.log(queryResults)
            toFetch(queryResults)
            // Every second, move on to the next page of results, if there is one.
            const timer = setInterval(async function () {
                if (loading) return // the previous page is still being loaded
                if (!queryResults.hasNext()) {
                    clearInterval(timer)
                    return
                }
                loading = true
                try {
                    queryResults = await queryResults.next()
                    toFetch(queryResults)
                } catch (err) {
                    console.log(err)
                } finally {
                    loading = false
                }
            }, 1000)
        })
    }

    /*
    export async function retrieveVariantItems(page) {
        let results = await wixData.query("Stores/Variants").limit(100).find();
        let allItems = results.items;
        while (results.hasNext()) {
            results = await results.next();
            allItems = allItems.concat(results.items);
        }
        return allItems;
    }
    */

    export function toFetch(queryResults) {
        const credentials = Buffer.from("XXXXXX", 'binary').toString('base64')
        const auth = {
            "Authorization": 'Basic ' + credentials,
            "Content-type": "application/x-www-form-urlencoded"
        }
        console.log(auth)
        let variants = queryResults.items.map(a => ({
            productId: a.productId,
            variant: a.variantName,
            variantId: a._id,
            productName: a.productName
        }))
        console.log(variants)
        variants.forEach((element) => {
            // 1. Look up the product by name.
            fetch("https://affaires.hiboutik.com/api/products/search/XXXXX=" + element.productName, {
                "method": "GET",
                "headers": auth,
            }).then((httpResponse) => {
                if (httpResponse.ok) {
                    return httpResponse.json();
                } else {
                    return Promise.reject("Fetch did not succeed");
                }
            }).then(json => {
                if (json.length > 0) {
                    console.log(json)
                    let productId = json[0].product_id
                    let sizeType = json[0].product_size_type
                    // 2. Get the sizes for the product's size type.
                    fetch("https://affaires.hiboutik.com/api/sizes/" + sizeType, {
                        "method": "GET",
                        "headers": auth,
                    }).then((httpResponse) => {
                        if (httpResponse.ok) {
                            return httpResponse.json();
                        } else {
                            return Promise.reject("Fetch did not succeed");
                        }
                    }).then(it => {
                        console.log(it)
                        let size_Id = it.map(a => a.size_id)
                        if (size_Id !== undefined) {
                            // size_Id is already an array (it has no .array property).
                            size_Id.forEach(sizeId => {
                                // 3. Get the available stock for each size and update the Wix inventory.
                                fetch("https://affaires.hiboutik.com/api/stock_available/product_id_size/" + productId + '/' + sizeId, {
                                    "method": "GET",
                                    "headers": auth,
                                }).then((httpResponse) => {
                                    if (httpResponse.ok) {
                                        return httpResponse.json();
                                    } else {
                                        return Promise.reject("Fetch did not succeed");
                                    }
                                }).then(thing => {
                                    if (thing !== undefined) {
                                        if (thing.length !== 0) {
                                            let item = {
                                                "trackQuantity": true,
                                                "variants": [{
                                                    "quantity": thing[0].stock_available,
                                                    "variantId": element.variantId,
                                                    "inStock": true
                                                }]
                                            };
                                            wixStoresBackend.updateInventoryVariantFieldsByProductId(element.productId, item);
                                        }
                                    }
                                }).catch(err => console.log(err));
                            })
                        }
                    }).catch(err => console.log(err));
                }
            }).catch(err => console.log(err));
        }) // forEach returns undefined, so no .catch() can be chained here
    }

No. Unfortunately, there is no way to have a job lasting more than a minute or so (and even that is not guaranteed).

Another way is to host your cron job on another service and call each page from that service with a longer timeout (but you still need the pagination). If you want to do a loop, you need to loop over all items and flag each item as processed somehow (in a sync database). Then, on your next cron batch, process all items that have not been flagged yet, and restart once everything has been processed.
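To make the flagging idea a bit more concrete, here is a minimal sketch in plain JavaScript. It is only an illustration under assumptions: `processedIds` is an in-memory stand-in for the "sync database", and `processUnflagged`, `syncItem`, `budgetMs` and `now` are hypothetical names, not part of any Wix API.

```javascript
// Hypothetical in-memory stand-in for a collection that stores the "processed" flags.
const processedIds = new Set();

/**
 * Process the items that are not flagged yet and stop once the time budget is used up.
 * `syncItem` is a placeholder for the per-item work (for example the API calls).
 * `now` is injectable so the time budget can be tested with a fake clock.
 */
async function processUnflagged(items, syncItem, budgetMs, now = Date.now) {
    const deadline = now() + budgetMs;
    let done = 0;
    for (const item of items) {
        if (processedIds.has(item._id)) continue; // handled in an earlier run
        if (now() >= deadline) break;             // leave the rest for the next run
        await syncItem(item);
        processedIds.add(item._id);               // flag only after the work succeeded
        done++;
    }
    // Once every item is flagged, clear the flags so the next run restarts from scratch.
    const allDone = items.every((item) => processedIds.has(item._id));
    if (allDone) processedIds.clear();
    return { done, allDone };
}
```

Each scheduled run would call `processUnflagged` with the full item list and a budget safely below the job's time limit. On a real site the flags would have to live in a collection rather than in memory, since memory is not kept between runs.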

Thanks for the link, I see what you mean: requesting a new page each time, tracked with a variable. I'll try it out, but I mean, there is no way to loop through everything in one go, right?

I was thinking about aggregation too, but it timed out, which means too many items are being processed (it's only the while loop that times out).

@Edouard Ledeuil have you read about the Wix backend limitations? https://support.wix.com/en/article/velo-about-backend-limitations The solution here is to paginate your update so that you don't run into a timeout. Something like:

    export async function refreshShop(page = 0) {
        return retrieveVariantItems(page).then((pageResult) => {
            let variants = pageResult.map(a => ({ productId: a.productId, variant: a.variantName, variantId: a._id, productName: a.productName }))
            variants.forEach((element) => fetch("https://MYAPI/" + element.productName, /...)

Since there is no way of knowing when the scheduled job will stop, you need to keep track of the latest page that was synchronized and pick up the update from there on the next round. A bit tedious, but doable.
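As a rough illustration of keeping track of the latest synchronized page, here is a minimal sketch in plain JavaScript. The names `syncState`, `syncNextPage`, `fetchPage` and `syncItem` are hypothetical, and the state is held in memory only for the example; on a real site it would have to be persisted (for example in a collection), and `fetchPage` could be built on a query using `.skip(page * pageSize).limit(pageSize)`.

```javascript
// Hypothetical stand-in for persisted state, e.g. a single row in a collection.
const syncState = { nextPage: 0 };

/**
 * Synchronize exactly one page per call and remember where to resume.
 * `fetchPage(page, pageSize)` should resolve to an array of items for that page.
 * `syncItem(item)` is a placeholder for the per-item update.
 */
async function syncNextPage(fetchPage, syncItem, pageSize = 100) {
    const page = syncState.nextPage;
    const items = await fetchPage(page, pageSize);
    for (const item of items) {
        await syncItem(item);
    }
    // A page shorter than pageSize means the end was reached: wrap around to page 0.
    syncState.nextPage = items.length < pageSize ? 0 : page + 1;
    return { page, count: items.length };
}
```

The scheduled job would then call `syncNextPage` once per run, so each run handles one page and the next run picks up at the following page. Handling one page per run is a design choice for this sketch; several pages could be processed per run as long as the total stays within the time limit.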

Is this clear?